ChatGPT—the AI that writes poems, codes websites, and engages in surprisingly human-like conversations—can sometimes seem magical. But behind its impressive abilities lies a powerful type of generative AI known as a GPT, or Generative Pre-trained Transformer. Understanding how generative AI like GPT works can transform that sense of magic into an appreciation for the engineering behind it.

In this article, we’ll break down the inner workings of GPT step by step, revealing how each part of the process contributes to the model’s ability to produce meaningful responses. By demystifying generative AI, you’ll gain a deeper understanding of how to use it effectively and confidently.

Setting the Stage: From Prompt to Response

Imagine you type this prompt into ChatGPT: “Write a short story about a cat who loves to code.” What happens next is a series of complex steps, each critical to transforming your prompt into a coherent response. Let’s walk through them one by one.

1. Prompt Engineering: More Than Just Words

Your prompt, “Write a short story about a cat who loves to code,” serves as the starting point for the AI’s response. But a prompt can be much more than just text:

- Additional Context: You can also provide links, documents, or other resources to give the AI extra information to reference.

- Multimodal Input (Emerging): In advanced models, prompts can include images or audio, allowing the AI to analyze multiple types of input simultaneously.

In its simplest form, though, the prompt is a piece of text that ChatGPT processes step by step to generate a meaningful output.

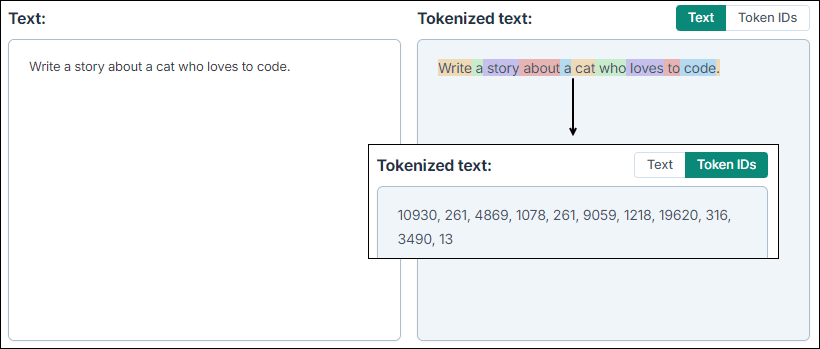

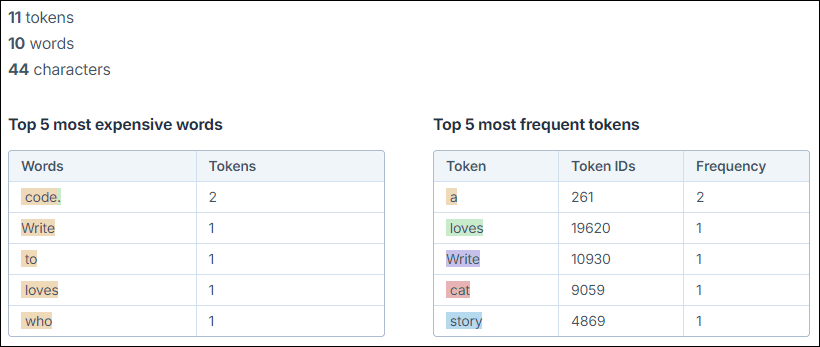

2. Tokenization: Breaking Language Down Into Parts

Before ChatGPT can process your prompt, it breaks the text into smaller units known as “tokens.” A token can be a whole word, a part of a word, or a punctuation mark. As a simplified, word-level illustration, our prompt might be tokenized into:

["Write", "a", "short", "story", "about", "a", "cat", "who", "loves", "to", "code"]

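ChatGPT processes these tokens rather than the original words. In practice, each token is mapped to an integer ID from a fixed vocabulary, and long or rare words are split into several subword tokens. Working from a fixed set of pieces lets the model handle any text, including words it has never seen whole.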
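To make the idea concrete, here is a minimal sketch of tokenization in Python. It is a toy: it splits on words and punctuation, whereas GPT models use a learned subword scheme, so the real token boundaries and IDs would differ. The function names `toy_tokenize` and `build_vocab` are invented for this illustration.

```python
import re

def toy_tokenize(text):
    """Split text into word and punctuation tokens (a toy rule, not a real GPT tokenizer)."""
    return re.findall(r"\w+|[^\w\s]", text)

def build_vocab(tokens):
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab

prompt = "Write a short story about a cat who loves to code."
tokens = toy_tokenize(prompt)
vocab = build_vocab(tokens)
token_ids = [vocab[t] for t in tokens]

print(tokens)
print(token_ids)
```

Notice that the two occurrences of "a" share the same ID. The model only ever sees the list of IDs, not the letters.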

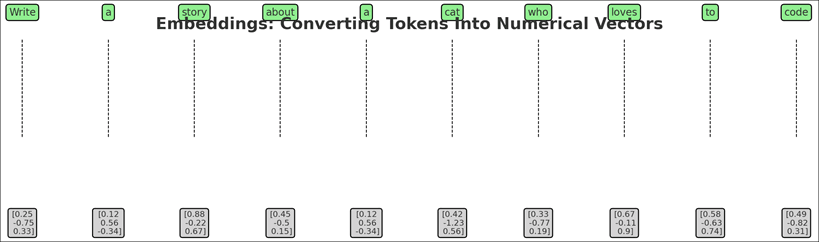

3. Embeddings: Converting Tokens Into Numerical Representations

Once the prompt is tokenized, each token must be converted into a form the model can compute with. This step is called encoding: each token is mapped to a numerical representation known as an embedding. Embeddings are vectors (lists of numbers) that capture the meaning of the tokens and their relationships in a multidimensional space.

Think of embeddings as giving each word a unique “fingerprint” in this vector space. Words that are similar in meaning will have embeddings that are close together in the space. For example, “cat” and “kitten” would have similar embeddings because they share related meanings. The encoding process is crucial because the generative AI “thinks” in numbers, not words, and these numerical vectors enable the model to process language mathematically.
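The "close together" idea can be sketched with a few lines of Python. The three-dimensional vectors below are made up for illustration; real embeddings are learned during training and have hundreds or thousands of dimensions. Cosine similarity is one common way to measure closeness, used here as an assumption rather than as a description of ChatGPT's internals.

```python
import math

# Hypothetical 3-dimensional embeddings, invented for this example.
toy_embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.75, 0.2],
    "code": [0.1, 0.2, 0.95],
}

def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

cat_kitten = cosine_similarity(toy_embeddings["cat"], toy_embeddings["kitten"])
cat_code = cosine_similarity(toy_embeddings["cat"], toy_embeddings["code"])

print(f"cat vs kitten: {cat_kitten:.2f}")
print(f"cat vs code:   {cat_code:.2f}")
```

With these invented numbers, "cat" scores much closer to "kitten" than to "code", which is the pattern a trained model would be expected to learn for related words.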

4. The Transformer: Understanding the Context

The “Transformer” is the core of ChatGPT’s architecture. It’s a type of artificial neural network specifically designed for understanding sequences, such as the order of words in a sentence. The Transformer has a crucial feature called “attention,” which allows it to:

- Focus on Important Words: The attention mechanism helps the generative AI figure out which words in the prompt are most important and how they relate to each other. For example, in our prompt, the relationships between “cat,” “loves,” and “code” would be particularly relevant.

- Understand Context Over Multiple Turns: In conversations, the model takes into account not only the current prompt but also the previous exchanges to maintain a coherent dialogue.

This attention-based analysis enables the generative AI to understand complex instructions and respond appropriately.
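For readers who want to see the mechanics, below is a stripped-down sketch of attention in plain Python. It assumes a single attention head and uses the token vectors directly as queries, keys, and values. Real Transformers add learned projection matrices, many heads, and a mask that stops tokens from looking ahead, so treat this as an illustration of the core idea only. The vectors are invented.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product attention with queries = keys = values = the input vectors."""
    d = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score how strongly this token relates to every token in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in vectors
        ]
        weights = softmax(scores)
        # Blend all token vectors together, weighted by relevance.
        blended = [
            sum(w * value[i] for w, value in zip(weights, vectors))
            for i in range(d)
        ]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights

tokens = ["cat", "loves", "code"]
vectors = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0], [1.0, 0.0, 0.9]]  # hypothetical embeddings
outputs, weights = self_attention(vectors)

for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how much one token "attends" to every token in the sequence. Because the made-up vectors for "cat" and "code" point in similar directions, "cat" gives more weight to "code" than to "loves".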

5. Prediction: Choosing the Next Word

Now that the model has a numerical understanding of your prompt, it moves on to generating a response. The generative AI doesn’t just pick a word at random; it calculates a probability for every candidate next token, based on the prompt and everything generated so far.

At each position, the model assigns these probabilities given all the preceding tokens. For instance, after the text “A cat who loves to,” it might assign “code” a higher probability than “sleep.”
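Here is a small sketch of how raw scores become probabilities. The scores (often called logits) below are invented; a real model produces one score for every token in its vocabulary. The softmax function shown is the standard way to turn such scores into a probability distribution, and `next_token_probabilities` is a helper name made up for this example.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for candidate next tokens after "A cat who loves to".
candidate_logits = {"code": 3.2, "sleep": 2.1, "eat": 1.5, "fly": -0.5}

def next_token_probabilities(logits_by_token):
    """Map each candidate token to its softmax probability."""
    tokens = list(logits_by_token)
    probs = softmax([logits_by_token[t] for t in tokens])
    return dict(zip(tokens, probs))

probabilities = next_token_probabilities(candidate_logits)
for token, p in sorted(probabilities.items(), key=lambda kv: -kv[1]):
    print(f"{token}: {p:.3f}")
```

A higher score always maps to a higher probability, but no candidate ever reaches exactly zero, which leaves room for less likely words to be chosen occasionally.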

6. Generation: Constructing the Response Word by Word

As the generative AI generates the response, it performs a process known as decoding. Decoding turns the model’s predictions back into human-readable text, one token at a time. At each step, the model uses the prompt and all tokens generated so far to predict the next one, and the chosen token is converted from its numerical ID back into text.

For each step, the generative AI evaluates the probabilities of different tokens appearing next, given the context. The simplest strategy, known as greedy decoding, always picks the token with the highest probability. Chat systems often sample from the probabilities instead, which is one reason the same prompt can produce different responses. For example, starting from “A cat who loves to,” the AI might determine that “code” is the most probable next word and select it.

This token-by-token decoding continues until the model produces a special end-of-sequence token or reaches a length limit.
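The generation loop can be sketched as follows. Everything here is a toy assumption: the probability table is invented, it looks only at the previous token (a real model considers the whole context), and `<end>` is a hypothetical stand-in for the model's stop signal. The sketch shows two strategies: always taking the most probable token, or sampling according to the probabilities.

```python
import random

END = "<end>"  # hypothetical end-of-sequence marker

# Hypothetical next-token probabilities keyed by the previous token only.
NEXT_TOKEN_PROBS = {
    "A": {"cat": 0.9, "dog": 0.1},
    "cat": {"who": 0.7, "sat": 0.3},
    "dog": {"who": 1.0},
    "who": {"loves": 0.8, "hates": 0.2},
    "loves": {"to": 1.0},
    "hates": {"to": 1.0},
    "to": {"code": 0.6, "sleep": 0.4},
    "code": {END: 1.0},
    "sleep": {END: 1.0},
    "sat": {END: 1.0},
}

def generate(start, greedy=True, max_tokens=10, rng=None):
    """Extend the sequence one token at a time until END or the length limit."""
    rng = rng or random.Random(0)
    tokens = [start]
    while len(tokens) < max_tokens:
        probs = NEXT_TOKEN_PROBS[tokens[-1]]
        if greedy:
            # Greedy decoding: always take the most probable token.
            next_token = max(probs, key=probs.get)
        else:
            # Sampling: pick a token at random, weighted by probability.
            next_token = rng.choices(list(probs), weights=list(probs.values()))[0]
        if next_token == END:
            break
        tokens.append(next_token)
    return tokens

print(" ".join(generate("A")))
print(" ".join(generate("A", greedy=False, rng=random.Random(1))))
```

Greedy decoding returns the same sentence every time, while sampling can vary from run to run depending on the random seed.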

Advanced Features: Handling Optional Inputs

In more sophisticated scenarios, ChatGPT can handle additional inputs beyond the basic prompt:

- Referencing Documents or Links: The generative AI can draw on provided documents or web content, which helps ground its responses in external information.

- Multimodal Input (Emerging): Some advanced models can analyze and generate content based on multiple types of inputs, such as combining text with images or audio, and these capabilities continue to expand.

These capabilities make the generative AI more versatile, allowing it to perform tasks like summarizing articles or answering questions based on complex datasets.

Pre-Training: The Foundation of Generative AI’s Abilities

Before interacting with users, GPT models undergo extensive “pre-training.” During this phase, the model is exposed to vast amounts of text data (think hundreds of billions of words from books, articles, websites, and other sources) and repeatedly practices predicting the next token. This training enables it to:

- Learn Grammar and Syntax: The model internalizes the rules of language, allowing it to construct grammatically sound sentences.

- Understand Context and Nuances: Exposure to a variety of texts helps the model grasp subtle differences in meaning, idioms, and even humor.

- Mimic Different Writing Styles: By “reading” content from various genres and formats, the model learns to replicate different styles, from formal business writing to casual conversations.

Pre-training equips the generative AI with the knowledge to respond to a wide range of prompts, even if it hasn’t been explicitly taught the answer.
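As a rough illustration of learning from text, the sketch below "trains" on four short sentences by counting which word follows which. This is a deliberately simple stand-in: GPT models learn by adjusting the weights of a neural network, not by counting word pairs, and the corpus and the `train_bigram_model` name are invented. The shared idea is that next-word probabilities come from patterns in the training text.

```python
from collections import Counter, defaultdict

# A tiny made-up corpus standing in for the vast training data.
corpus = [
    "the cat loves to code",
    "the cat loves to sleep",
    "the dog loves to play",
    "the cat loves to code",
]

def train_bigram_model(sentences):
    """Estimate P(next word | previous word) from counts of adjacent word pairs."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    model = {}
    for prev, following in counts.items():
        total = sum(following.values())
        model[prev] = {word: count / total for word, count in following.items()}
    return model

model = train_bigram_model(corpus)
print(model["to"])
print(model["the"])
```

Because "code" follows "to" in two of the four sentences, the toy model rates it as the most likely continuation. Nobody told it that rule; it emerged from the data, which is the same principle at work in pre-training at a vastly larger scale.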

Demystifying the “Magic”: Practical Takeaways

By understanding the steps involved in how GPT works—from tokenization to attention to probability-driven generation—you can gain a clearer picture of what makes these systems so powerful. Here’s how this knowledge benefits you:

- Crafting Better Prompts: Clear, specific instructions will yield more accurate and relevant responses.

- Recognizing Limitations: Even with pre-training, GPT can make mistakes, show bias, or struggle with highly specialized topics.

- Using AI Responsibly: Double-check outputs for accuracy, be mindful of ethical considerations, and use generative AI as a tool to complement your skills rather than replace them.

Looking Ahead: The Evolving World of AI

As AI technology continues to evolve, GPT models will become even more capable, potentially integrating new types of inputs and expanding their understanding of complex tasks. By pulling back the curtain on how these models work, you empower yourself to navigate this fast-changing landscape with greater confidence.

Note: AI tools supported the brainstorming, drafting, and refinement of this article.

Jacob is a seasoned IT professional with 20+ years of experience and a proven track record of driving business value in the financial services sector. His extensive expertise spans Business Analysis, Knowledge Management, and Solution Architecture. Skilled in UX/UI design and rapid prototyping, he leverages comprehensive experience with ServiceNow and ITSM competencies. Jacob’s passion for AI is reflected in his Azure AI Engineer Associate certification.